How do we get a robot to respond like it’s human? Matthias Scheutz is on the case

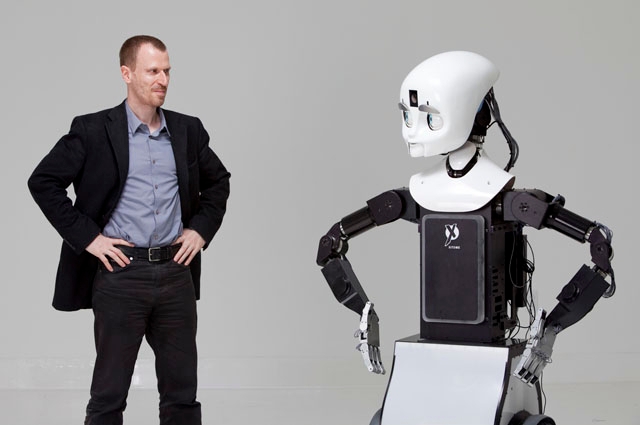

“Ultimately, I want to understand what it takes to build a mind,” says Matthias Scheutz, here with his robot Cindy. “Not a human mind, necessarily, but a mind.” Photo: Alonso Nichols

Matthias Scheutz is constantly thinking. As he describes his work, his cadence quickens. His arms swing in midair. His eyes widen, and he takes on a distant look, as if his mind is 10 steps ahead of what he’s saying.

That may well be. Scheutz, an associate professor of computer science in the School of Engineering, has two Ph.D.s (one in philosophy and one in cognitive and computer science), and he pours this intellectual power into one pursuit.

“Ultimately, I want to understand what it takes to build a mind,” he says with a grin. “Not a human mind, necessarily, but a mind.”

The “mind” Scheutz is talking about isn’t biological. It’s digital. His specialty is creating robots that think and act like humans, or at least come close—which will be an important trait, he says, as these devices become more deeply embedded in our daily lives.

To a certain extent, they already are. While today’s world isn’t exactly the future predicted in the cartoon The Jetsons, millions of us own domestic robots like the Roomba, the popular autonomous vacuum cleaner. Scheutz calls these devices “social” robots. They live and work among us, and although they’re unable to interact with humans in a meaningful way, he says, they often seem sentient. When you watch a Roomba scoot around your living room, it’s remarkably easy to think of it as another living creature, to project animal desires and needs onto it, even to talk to it as if it could respond.

“People fall in love with their Roomba,” he says. “They treat it almost like a pet. Some people even clean for it because they feel bad leaving a mess. Yet it’s an appliance; it doesn’t even know we exist.”

Chatting with Machines

At the moment, our interaction with social robots is completely one-sided. These devices simply don’t have the means to understand our words and gestures. That’s something Scheutz wants to change. If we can create devices that seem more humanlike in their response to us, he reasons, they may be well suited for more complex work with people, such as tending to the basic needs of hospital patients or the elderly at home.

“They could watch patients for the whole day, make sure they take their medicine, help them get dressed, get out of bed, all of the kinds of things a human would do,” says Scheutz, who also has an appointment in the psychology department. “A robot could help these people be more autonomous.”

The ease of working with a robot that we can command verbally might be handy in other environments, as well. Scheutz envisions them aiding search-and-rescue missions in collapsed buildings, for example. “They’d be able to talk with first responders and collaboratively explore the rubble, the way humans would, without putting the rescuers in danger,” he says.

Tasks such as these, however, require robots that can interact with humans on our level. They’d have to comprehend not just colloquial speech, for instance, but also gestures, facial expressions and emotional inflection. They’d have to use reason and understand context. In short, Scheutz says, they’d need to master the staggeringly subtle and complex ways of human interaction.

Creating a robot that can do all these things is fantastically difficult. Roboticists around the world are working on parts of the puzzle, but usually in a way that Scheutz considers piecemeal: one team may be improving machine vision, while another focuses on language comprehension or spatial awareness. While each group is doing important research, all the pieces will have to come together seamlessly for a robot to interact with humans in the way Scheutz envisions.

Software on the Mind

To move toward that goal, his research team at Tufts is developing a sort of universal software that lets different robotic systems talk to one another and share information. It’s the driving force behind Scheutz’s latest creation, a tall, gangly robot named Cindy. She looks at once human and alien—a towering metal frame with motorized arms, set atop a two-wheeled base and crowned with an oversized white plastic head.

Her appearance is striking, but her brain—the complex array of software controlling her—is what really sets her apart. Using software they developed, Scheutz’s team has pushed her abilities to new heights.

A video that Scheutz made demonstrates how. Cindy is tasked with finding a first-aid kit in an otherwise empty room. She doesn’t know what it looks like, and says so. A grad student clarifies: “It’s a white box with a red cross on it.” Cindy considers this for a moment, then spots her target across the room. She blinks thoughtfully, grasps the handle, retrieves the object and turns slowly towards the door.

In human terms, this is nothing impressive. For a robot, though, it’s the equivalent of Olympic-level gymnastics. Defining and locating an object on the fly takes an astonishing degree of processing ability. To do so, a robot like Cindy must be able to “see” its surroundings, understand the subtle cues of spoken commands, know when it doesn’t recognize an object, request a definition and finally piece together what actions it needs to take based on the information available.

“For all of a robot’s components to work together, there has to be some sort of common currency,” says Evan Krause, assistant director of Scheutz’s lab. That’s where the software Scheutz’s group developed comes in. “We use it to pull together dozens of systems, like computer vision, natural language comprehension and belief modeling—the ability to form a mental model of what other people know or don’t know—to accomplish a single task,” he says.

This is especially significant, Krause says, because it allows a robot to create meaning from small bits of information. Using the Tufts software, it can “learn” new words and actions on the fly, building its vocabulary—and, in a way, its intelligence.

The software is not yet a perfect system, however, and its ability to let robots recognize things such as gestures and context is limited. “If Cindy were watching us, she may be able to say, ‘Evan is a person talking, and David is a person writing something down,’ but she might not be able to put together that what we’re doing is an interview,” says Krause.

It may be a long time, possibly decades, before robot “intelligence” can achieve that sort of high-level knowledge. But that doesn’t bother Scheutz. He maintains a seemingly bottomless enthusiasm for his research. After all, each robot he programs and tests brings him that much closer to his lofty goal—creating a digital mind.

David Levin is a freelance science writer based in Boston.